by David Tompkins

When labs and universities shut down for the COVID-19 pandemic, researchers turned to remote methods to keep their research moving. For many infant researchers, this meant running unmoderated looking time experiments through platforms like Gorilla or Lookit. These studies run on the participant’s own device. The participant watches a prepared sequence of stimuli, the experimenter later receives a webcam recording, and the experimenter then codes where on the screen the child appeared to be looking. These methods played a critically important role in allowing research to continue during the shutdown and may help researchers reach new participant pools. However, they may also introduce new challenges and sources of variation in participants’ performance that have not yet been considered.

There are many salient reasons to expect some challenges or increased variability in remote testing. Each participant views our stimuli in spaces with different distractions and on devices with different settings and dimensions. It is reasonable to expect that participants in homes with barking dogs or crying siblings might pay a different amount of attention to the screen than their peers in quiet (although unfamiliar) laboratory spaces.

An overlooked source of differences is the participant’s screen itself. Researchers have considered, and attempted to account for, differences in monitor size (and the accompanying differences in stimulus size), volume, brightness, and so on. Much less attention has been given to whether what appears on the participant’s screen is precisely what we intended. In part, this is because most online testing records only the infant’s webcam. On most platforms, it is impossible to view what is actually on the participant’s monitor. Even when researchers do watch the screen (for example, during moderated remote sessions), they use what is shown on their own screen as a guide, not a recording of what actually appeared on the participant’s screen. When we analyze looking time data, we assume that we know what was visible to the participant. Because most platforms do not record the participant’s screen, that assumption rests on our systems working as intended.

When we converted some of our in-person studies to an online format at the start of the pandemic, we used Gorilla and wrote a custom script to record not only the webcam but also what was on the participant’s screen.* We were very surprised by the variation in what participants saw on their screens.

We collected videos from 97 children 12 to 48 months of age in 2020 and 2021.** These videos were collected on the Gorilla platform with a custom script that used the RecordRTC JavaScript package. Our task was approximately six minutes long and combined trials with two stimuli (left and right) and trials with three stimuli (top, bottom-left, and bottom-right).

Of the 97 session videos, only 19 displayed the stimuli entirely as intended throughout the session. Another 40 displayed the stimuli as intended but showed a visible mouse cursor during some or all of the trials. The remaining 38 videos had other deviations, ranging from a momentary distraction that affected a single trial to a deviation that persisted throughout the session. Because many infant researchers use Lookit for similar studies, we also tried to recreate these issues on the Lookit platform from the participant’s side.

We saw the following issues in the videos collected on Gorilla, listed in order of frequency. We note below whether we were able to recreate the same issues on Lookit:

- Mouse cursor visible on screen (72 of 97 videos). This was usually a fairly minor distractor, left by the parent somewhere on the screen. Sometimes, however, the child seemed to take control of the mouse and move it around over the stimuli. This was the only issue in 40 of the 97 videos, and it could have been prevented programmatically. Lookit offers the ability to hide the cursor, and researchers who use that feature should not encounter this issue.

- Missing stimuli (9 of 97 videos). Several participants were missing stimuli (either a full trial or only one side) on specific trials. This appeared to be a loading issue, as in each case it followed a delay in loading a new trial. It is possible this was a problem with the platform or with the participant’s internet connection.

- Right-click somewhere on the screen (7 of 97 videos). Occasionally the child managed to right-click somewhere on the screen, bringing up the options menu and obscuring part of the screen. Because we asked caregivers to close their eyes during the task, they did not always notice when their child took the task in an unexpected direction. Unlike the mouse cursor, this menu is not easily hidden, and we were able to recreate the issue on Lookit.

- Volume changing mid-task (6 of 97 videos). Some participants found the volume up and down buttons and either silenced or maximized the task volume. We were able to recreate this on Lookit.

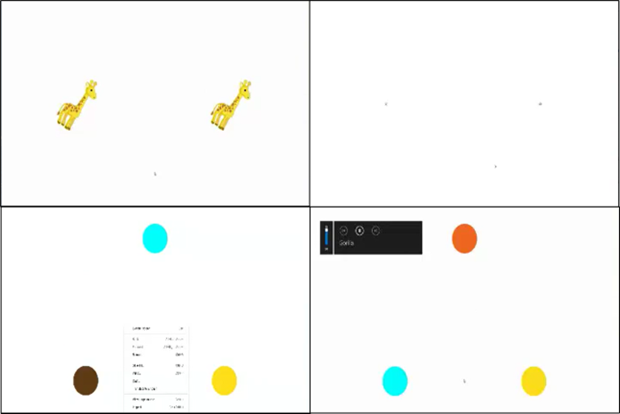

Image 1 – (Top-Left) A mouse cursor is visible in the lower center of the screen. (Top-Right) The two stimulus images failed to load for this participant, leaving a mostly blank screen. (Bottom-Left) The right-click options menu is visible in the lower center of the screen. (Bottom-Right) The volume adjustment and media player window is visible in the upper left part of the screen.

- “Now Sharing” pop-up visible (6 of 97 videos). Our method for capturing the participant’s screen created a pop-up at the bottom of the screen. We asked participants to click the “Hide” button, and most did, but for 6 participants the pop-up remained visible throughout the session, rendering those sessions unusable. This issue was specific to our screen-capture method.

- Opened either the Windows search bar or the Mac applications dock (5 of 97 videos). This happened when the child pressed a single key on Windows or dragged the mouse to the bottom of the screen on a Mac. We were able to recreate this on Lookit, though it may vary by operating system and version.

- Plugin-related image overlays (5 of 97 videos). A few participants appeared to have a browser plugin active (for example, the Pinterest plugin). These plugins placed colorful icons on top of our stimuli. We were able to recreate this on Lookit.

- Navigated away from the page (5 of 97 videos). This happened in different ways, including what appeared to be pressing Alt+Tab on Windows. In our study, navigating away from the page did not pause the task. In Lookit, however, actions that exited the full-screen view also paused the task. As a result, opening a new tab or printing the page would pause the task, but pressing Alt+Tab would not.

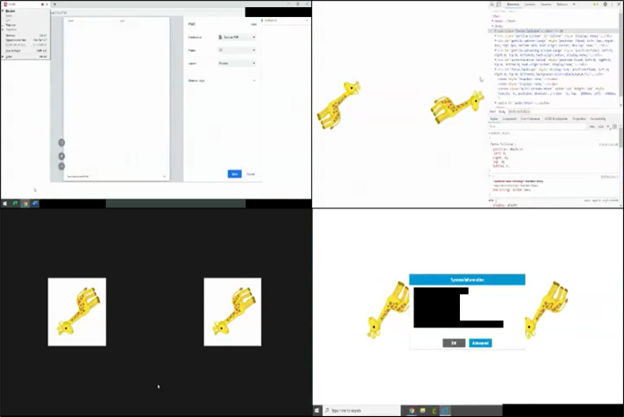

Image 2 – (Top-Left) A pop-up that indicates screen sharing is visible in the lower center of the screen. (Top-Right) The Windows start menu is visible, obscuring the left side of the screen. (Bottom-Left) The Mac applications dock is visible along the bottom of the screen. (Bottom-Right) Icons are visible in the upper-right and upper-left of the screen. We believe these are generated by the Pinterest plugin.

- Revealing the icon to exit full-screen view (4 of 97 videos). We required participants to view the task in full screen. By moving the mouse pointer to the top of the screen, some children revealed a large X icon that could exit the full-screen view. We were able to recreate this on Lookit.

- We also saw a number of unique and interesting issues:

  - One child attempted to print the experiment (after first checking their security settings).

  - One child opened the developer console and briefly inspected the website code.

  - One child opened their system information, then attempted to activate ‘Sticky Keys’.

  - In one session, an email notification appeared mid-session.

  - One session was completed in ‘dark mode’ (presumably via a plugin).

  - A mysterious black line flashed above the right side of one participant’s stimuli throughout the task (we think this may have been a giant text-entry cursor).

  - Two sessions were completed on computers with persistent warnings overlaid on the screen, possibly indicating that their copy of Windows had not been activated.

  - One caregiver shared their email tab instead of the task. We believe the participant completed the task, but we are not sure precisely what they saw.

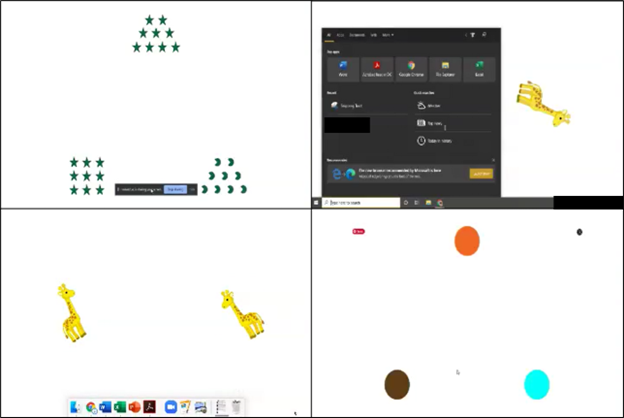

Image 3 – (Top-Left) A menu for printing the page covers the experiment entirely. (Top-Right) The developer console is open on the right side of the window, offsetting the stimuli. (Bottom-Left) The background color of our experiment has been changed from white to black, presumably by a plugin. (Bottom-Right) A system information dialog covers the center of the screen. We have censored potentially identifiable information in these images.

While these errors paint a fun picture of young children’s haphazard and presumably unintentional keyboard tapping, they also challenge our assumptions about the validity of our looking time analyses. When a child looks to the right side of the screen, they might be looking at the stimulus on that side, or they might be attending to the mouse cursor, icons overlaid by a plugin, or an unexpected pop-up.

We found some form of distracting screen element for most participants, but the proportion of the session during which the distractor was present varied widely. Some distractors (such as a visible mouse pointer) tended to persist across all of a participant’s trials, while others (such as missing stimuli or an email notification) affected only a few trials. Distractors also varied in severity. A mouse pointer left at the bottom of the screen is not a terribly distracting item (especially compared with other distractions in the home), but missing stimuli, opening a new tab, or viewing an email tab instead of the stimuli are clearly exclusion-worthy events.
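When screen recordings are available, one way to act on this variability during analysis is to code each distractor as a time interval and flag trials by both overlap and severity. The following is a hypothetical Python sketch, not the procedure we used. The `Interval` and `Distractor` types, the distractor labels, the three severity tiers, and the 50% threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    start: float  # seconds from session start
    end: float


@dataclass
class Distractor:
    interval: Interval
    kind: str  # hypothetical label assigned by a coder reviewing the screen recording


# Hypothetical severity tiers; each lab would need to decide and report its own.
IGNORED = {"cursor"}  # e.g., a pointer resting at the edge of the screen
SEVERE = {"missing_stimuli", "navigated_away", "wrong_tab_shared"}  # exclude on any overlap


def overlap(a: Interval, b: Interval) -> float:
    """Length of time (in seconds) shared by intervals a and b."""
    return max(0.0, min(a.end, b.end) - max(a.start, b.start))


def flag_trials(trials, distractors, max_minor_fraction=0.5):
    """Return one flag per trial indicating whether it should be excluded."""
    flags = []
    for trial in trials:
        duration = trial.end - trial.start
        # Any overlap with a severe distractor excludes the trial outright.
        severe = any(
            d.kind in SEVERE and overlap(trial, d.interval) > 0 for d in distractors
        )
        # Minor distractors are summed; overlapping ones can double count, so clamp at 1.
        minor = sum(
            overlap(trial, d.interval)
            for d in distractors
            if d.kind not in SEVERE and d.kind not in IGNORED
        )
        minor_fraction = min(1.0, minor / duration) if duration > 0 else 0.0
        flags.append(
            {
                "exclude": severe or minor_fraction > max_minor_fraction,
                "minor_fraction": minor_fraction,
            }
        )
    return flags


# Example: three 10-second trials with a cursor present throughout,
# a brief loading failure in trial 2, and a right-click menu for most of trial 3.
trials = [Interval(0, 10), Interval(10, 20), Interval(20, 30)]
distractors = [
    Distractor(Interval(0, 30), "cursor"),
    Distractor(Interval(12, 13), "missing_stimuli"),
    Distractor(Interval(20, 27), "right_click_menu"),
]
for i, flag in enumerate(flag_trials(trials, distractors), start=1):
    print(i, flag)
```

In this example, trial 1 is kept because the cursor is ignored. Trial 2 is excluded because of the loading failure, and trial 3 is excluded because the menu covers 70% of it. Treating severe events as disqualifying on any overlap, while tolerating brief minor ones, mirrors the distinction between a resting mouse pointer and exclusion-worthy events. Where to draw those lines remains a judgment call.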

There are good, clear ways to mitigate these issues (for example, programmatically hiding the mouse cursor, as Lookit allows), but the issues will be difficult to eliminate entirely. Many of the issues we saw were simply caused by a small child sitting in front of a computer while their caregiver’s eyes were closed. The most common issue other than the mouse cursor was missing stimuli, a serious problem with no easy mitigation. Asking caregivers to close other applications, disable plugins, and move desktop keyboards out of the child’s reach are good recommendations for improving data quality, but these steps might not be sufficient. Ultimately, many of these issues are part of the trade-offs involved in conducting unmoderated looking time studies. The challenge is how researchers can gain more confidence about what is on the screen during testing. One solution is the one we chose: modify the program used to collect data so that it records the screen as well as the webcam. This may not be possible for all researchers, however. To be clear, we do not believe these issues are serious enough to warrant abandoning unmoderated remote testing. Rather, researchers should understand this source of error in their data and consider what steps they can take to reduce unintended deviations in what children see during these procedures.

Thanks to Dr. Marianella Casasola, Dr. Lisa Oakes, Dr. Vanessa LoBue, Annika Voss, Mary Simpson, and the Cornell Play and Learning Lab research assistants, who gave input on this draft or helped collect these data. The project this post is based on was supported by NSF Award BCS-1823489, and we greatly appreciate the NSF’s support of our research.

Footnotes:

* We had incorporated screen recording primarily as a way to ensure the timing of our stimuli was accurate and to facilitate aligning our coding of the webcam recording with the start of the trial.

** A total of 120 children participated. We excluded the remaining 23 participants either for demographic reasons (e.g., age) or because their sessions lacked webcam recordings. We did not review their screen footage.

About the Author

David Tompkins

Cornell University

David Tompkins is a graduate student in the Human Development program at Cornell University. He works in Dr. Marianella Casasola’s Play and Learning Lab. He is interested in understanding how child development is affected by societal change and how that change might affect researchers’ ability to understand development.